Creating an EKS cluster in AWS is straightforward with Terraform. We will start by looking up the EKS module in the Terraform Registry (https://registry.terraform.io/modules/terraform-aws-modules/eks/aws/18.26.3). This article uses version 18.26.3 of the module.

Quick Start

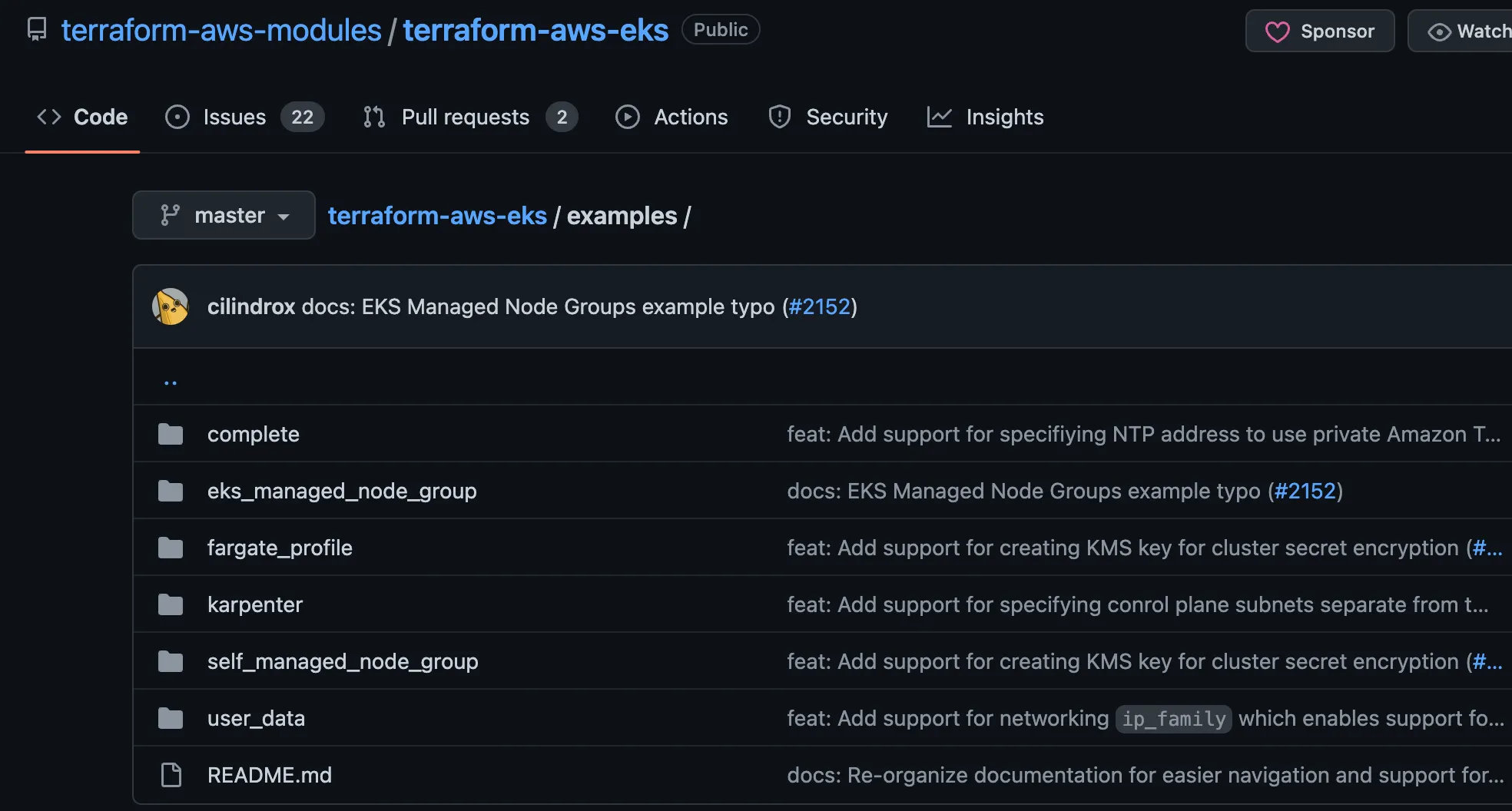

We can always refer to the examples in the GitHub repo (https://github.com/terraform-aws-modules/terraform-aws-eks/tree/master/examples) to quick-start our deployment.

Manual Definition

We can also create our own EKS definition using the documentation for the AWS EKS module in the Terraform Registry. We will need to refer to the Inputs section (https://registry.terraform.io/modules/terraform-aws-modules/eks/aws/18.26.3?tab=inputs) of the documentation to write our HCL files.

The HCL files should contain the definition for our EKS cluster and the node groups we require. We will be using EKS managed node groups in this article. They are sufficient for most use cases unless you need to customize your own Kubernetes nodes.

A good practice is to set the node group defaults so that we do not have to repeat the specifications for each node group.

The sample Terraform configuration does not include any node groups other than the default node group. If you wish to create additional node groups, you can add them under eks_managed_node_groups as follows (take note of the node label and taint, which are matched by a node selector and a toleration on the pods):

# New node group (v18.x key names: desired_size/max_size/min_size, labels, tags)
new_node_group = {
  name            = "new-nodegroup"
  use_name_prefix = true

  desired_size = 2
  max_size     = 4
  min_size     = 2

  instance_types = ["c5n.large", "c5a.large", "c5.large"]

  # Node label, matched by a node selector on the pods
  labels = {
    dedicated = "new-nodegroup"
  }

  # Taint, matched by a toleration on the pods
  taints = [
    {
      key    = "dedicated"
      value  = "new-nodegroup"
      effect = "NO_SCHEDULE"
    }
  ]

  tags = {
    Name = format("%s-new-nodegroup", local.name)
  }
}

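The label and taint let us pin deployments to this node group. The taint keeps out any pod that does not carry a matching toleration, and the label lets pods target these nodes with a node selector.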
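To make the pod side of this concrete, here is a minimal sketch using the kubernetes provider configured in this article. The deployment name, labels and image are hypothetical placeholders. Note that Kubernetes spells the taint effect NoSchedule, while the EKS node group setting uses NO_SCHEDULE.

```hcl
# Hypothetical workload pinned to the "new-nodegroup" node group.
resource "kubernetes_deployment" "example" {
  metadata {
    name   = "example-app" # placeholder name
    labels = { app = "example-app" }
  }

  spec {
    replicas = 2

    selector {
      match_labels = { app = "example-app" }
    }

    template {
      metadata {
        labels = { app = "example-app" }
      }

      spec {
        # Schedule only onto nodes that carry the node group's label
        node_selector = {
          dedicated = "new-nodegroup"
        }

        # Tolerate the node group's taint so the pods are allowed to land there
        toleration {
          key      = "dedicated"
          operator = "Equal"
          value    = "new-nodegroup"
          effect   = "NoSchedule"
        }

        container {
          name  = "app"
          image = "nginx:1.23" # placeholder image
        }
      }
    }
  }
}
```

The node selector alone would be enough to attract the pods, and the toleration alone would only permit them. Using both keeps other workloads off the node group and keeps this workload on it.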

An important variable to take note of is max_unavailable_percentage, which lets us specify the maximum percentage of nodes that may be unavailable during a node group update. For maximum stability in a production cluster, you can set this as low as 1 (EKS does not accept 0), or set max_unavailable to a fixed number of nodes instead. However, the lower the value, the longer it takes to update your node groups.
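As a small sketch of the most conservative setting, the fragment below goes inside the module "eks" block and replaces nodes one at a time. It assumes you want the same behaviour for every node group. Set either max_unavailable or max_unavailable_percentage, not both.

```hcl
# Fragment for the module "eks" block: roll node groups one node at a time.
eks_managed_node_group_defaults = {
  update_config = {
    max_unavailable = 1 # absolute node count; the alternative is max_unavailable_percentage
  }
}
```

An individual node group can still override this by defining its own update_config.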

If you refer to the Terraform configuration shown below, the node group defaults use Spot capacity while the node group named default overrides this with On-Demand capacity. This is my personal preference, as the default node group usually hosts essential services that are critical to the cluster, such as CoreDNS and the AWS Load Balancer Controller. I prefer to have them scheduled on On-Demand nodes for maximum stability, because AWS can reclaim Spot instances at short notice whenever it needs the capacity back.

Looking further at the Terraform configuration below, you will see that the node groups are provisioned in the private subnets while the control plane network interfaces (ENIs) are placed in the public subnets. For the best security, it is advisable to place the control plane in the private subnets and keep the API endpoint private. For this example, however, the public endpoint is enabled so that I can call the Kubernetes API from my local computer, which is outside the AWS network. Note that it is the cluster_endpoint_public_access setting, rather than the subnet choice, that makes the API reachable from outside the VPC.
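If you do keep the public endpoint, one way to reduce exposure is to limit which source addresses may reach it. The fragment below is a sketch only. The local value admin_cidrs and its address are hypothetical, so substitute your own public IP or office range.

```hcl
locals {
  # Hypothetical value: replace with your own public IP or office range
  admin_cidrs = ["203.0.113.10/32"]
}

# Fragment for the module "eks" block
# cluster_endpoint_public_access       = true
# cluster_endpoint_public_access_cidrs = local.admin_cidrs
```

The two module arguments are shown commented out because they belong inside the existing module "eks" block, where they replace the 0.0.0.0/0 entry.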

I have included my own copy of Terraform HCL files for your reference. Please feel free to edit to your own requirements.

Terraform HCL files

provider "aws" {
  region = local.region

  default_tags {
    tags = {
      Project = local.project
    }
  }
}

################################################################################
# EKS Module
################################################################################

module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 18.0"

  cluster_name    = local.name
  cluster_version = local.cluster_version

  # ID of the VPC where the cluster and its nodes will be provisioned
  vpc_id = local.vpc

  # A list of subnet IDs where the EKS cluster control plane (ENIs) will be provisioned.
  control_plane_subnet_ids = local.public_subnets

  # A list of subnet IDs where the nodes/node groups will be provisioned.
  subnet_ids = local.private_subnets

  cluster_endpoint_private_access      = true
  cluster_endpoint_public_access       = true
  cluster_endpoint_public_access_cidrs = ["0.0.0.0/0"]

  # create OpenID Connect Provider for EKS to enable IRSA
  enable_irsa = true

  # Default for all node groups
  eks_managed_node_group_defaults = {
    capacity_type          = "SPOT"       # default spot instance
    key_name               = local.key    # default ssh keypair for nodes
    ebs_optimized          = true         # ebs optimized instance
    ami_type               = "AL2_x86_64" # default ami type for nodes
    create_launch_template = true
    enable_monitoring      = true

    # whether each new launch template version becomes the default version
    update_launch_template_default_version = false

    # Root volume settings. In v18.x these go in block_device_mappings when the
    # module creates the launch template (disk_size is ignored in that case).
    block_device_mappings = {
      xvda = {
        device_name = "/dev/xvda"
        ebs = {
          volume_size = 100   # default volume size (GiB)
          volume_type = "gp3" # gp3 ebs volume
          throughput  = 150   # throughput (MiB/s)
          iops        = 3000  # baseline iops for gp3
        }
      }
    }

    # Subnets to use (Recommended: Private Subnets)
    subnet_ids = local.private_subnets

    # user data for LT, run before the node bootstrap script
    pre_bootstrap_user_data = local.userdata

    update_config = {
      max_unavailable_percentage = 10 # or set `max_unavailable`
    }
  }

  eks_managed_node_groups = {
    # default node group
    default = {
      name            = "default-node"
      use_name_prefix = true

      capacity_type = "ON_DEMAND" # default node group to be on-demand

      desired_size = 2
      max_size     = 8
      min_size     = 2

      instance_types = ["t3.medium", "t3a.medium"]
    }

    # Any other Node Group
  }

  # Security Group
  node_security_group_additional_rules = {
    ingress_allow_access_from_control_plane = {
      type                          = "ingress"
      protocol                      = "tcp"
      from_port                     = 9443
      to_port                       = 9443
      source_cluster_security_group = true
      description                   = "Allow access from control plane to webhook port of AWS load balancer controller"
    }
  }

  manage_aws_auth_configmap = true

  # Map your required users
  aws_auth_users = var.aws_auth_users

  tags = {
    Project = local.project
  }
}

################################################################################
# Kubernetes provider configuration
################################################################################

data "aws_eks_cluster" "cluster" {
  name = module.eks.cluster_id
}

data "aws_eks_cluster_auth" "cluster" {
  name = module.eks.cluster_id
}

provider "kubernetes" {
  host                   = data.aws_eks_cluster.cluster.endpoint
  cluster_ca_certificate = base64decode(data.aws_eks_cluster.cluster.certificate_authority[0].data)
  token                  = data.aws_eks_cluster_auth.cluster.token
}

################################################################################
# Cluster
################################################################################

output "cluster_arn" {
  description = "The Amazon Resource Name (ARN) of the cluster"
  value       = module.eks.cluster_arn
}

output "cluster_certificate_authority_data" {
  description = "Base64 encoded certificate data required to communicate with the cluster"
  value       = module.eks.cluster_certificate_authority_data
}

output "cluster_endpoint" {
  description = "Endpoint for your Kubernetes API server"
  value       = module.eks.cluster_endpoint
}

output "cluster_id" {
  description = "The name/id of the EKS cluster. Will block on cluster creation until the cluster is really ready"
  value       = module.eks.cluster_id
}

output "cluster_oidc_issuer_url" {
  description = "The URL on the EKS cluster for the OpenID Connect identity provider"
  value       = module.eks.cluster_oidc_issuer_url
}

output "cluster_platform_version" {
  description = "Platform version for the cluster"
  value       = module.eks.cluster_platform_version
}

output "cluster_status" {
  description = "Status of the EKS cluster. One of `CREATING`, `ACTIVE`, `DELETING`, `FAILED`"
  value       = module.eks.cluster_status
}

output "cluster_primary_security_group_id" {
  description = "Cluster security group that was created by Amazon EKS for the cluster. Managed node groups use this security group for control-plane-to-data-plane communication. Referred to as 'Cluster security group' in the EKS console"
  value       = module.eks.cluster_primary_security_group_id
}

################################################################################
# Security Group
################################################################################

output "cluster_security_group_arn" {
  description = "Amazon Resource Name (ARN) of the cluster security group"
  value       = module.eks.cluster_security_group_arn
}

output "cluster_security_group_id" {
  description = "ID of the cluster security group"
  value       = module.eks.cluster_security_group_id
}

################################################################################
# Node Security Group
################################################################################

output "node_security_group_arn" {
  description = "Amazon Resource Name (ARN) of the node shared security group"
  value       = module.eks.node_security_group_arn
}

output "node_security_group_id" {
  description = "ID of the node shared security group"
  value       = module.eks.node_security_group_id
}

################################################################################
# IRSA
################################################################################

output "oidc_provider" {
  description = "The OpenID Connect identity provider (issuer URL without leading `https://`)"
  value       = module.eks.oidc_provider
}

output "oidc_provider_arn" {
  description = "The ARN of the OIDC Provider if `enable_irsa = true`"
  value       = module.eks.oidc_provider_arn
}

################################################################################
# IAM Role
################################################################################

output "cluster_iam_role_name" {
  description = "IAM role name of the EKS cluster"
  value       = module.eks.cluster_iam_role_name
}

output "cluster_iam_role_arn" {
  description = "IAM role ARN of the EKS cluster"
  value       = module.eks.cluster_iam_role_arn
}

output "cluster_iam_role_unique_id" {
  description = "Stable and unique string identifying the IAM role"
  value       = module.eks.cluster_iam_role_unique_id
}

################################################################################
# EKS Managed Node Group
################################################################################

output "eks_managed_node_groups" {
  description = "Map of attribute maps for all EKS managed node groups created"
  value       = module.eks.eks_managed_node_groups
}

output "eks_managed_node_groups_autoscaling_group_names" {
  description = "List of the autoscaling group names created by EKS managed node groups"
  value       = module.eks.eks_managed_node_groups_autoscaling_group_names
}

################################################################################
# Additional
################################################################################

output "aws_auth_configmap_yaml" {
  description = "Formatted yaml output for base aws-auth configmap containing roles used in cluster node groups/fargate profiles"
  value       = module.eks.aws_auth_configmap_yaml
}

terraform {
  required_version = ">= 0.13.1"

  required_providers {
    aws        = ">= 3.72.0"
    local      = ">= 1.4"
    random     = ">= 2.1"
    kubernetes = "~> 2.0"
  }
}

# Variables

# Map Users
variable "aws_auth_users" {
  description = "Additional IAM users to add to the aws-auth configmap."

  type = list(object({
    userarn  = string
    username = string
    groups   = list(string)
  }))

  default = [
    {
      userarn  = "arn:aws:iam::<account id>:user/<username>"
      username = "<username>"
      groups   = ["system:masters"]
    }
  ]
}

# Local Variables
locals {
  name            = "my-cluster"
  cluster_version = "1.22"
  region          = "ap-southeast-1"
  project         = "<project name>"
  key             = "<key pair in aws>"
  vpc             = "<vpc id>"
  public_subnets  = ["<public subnets id>"]
  private_subnets = ["<private subnets id>"]
  userdata        = "" # add any commands to append to user data if needed
}

Creating the Cluster

You can create the cluster by executing the following commands:

terraform init
terraform plan
terraform apply

Authenticating with the Cluster

You can authenticate with the cluster using the AWS CLI:

aws eks update-kubeconfig --region ap-southeast-1 --name my-cluster

Testing Cluster Access

You can test whether you can access the cluster using any kubectl command:

~# kubectl get ns
NAME              STATUS   AGE
default           Active   58m
kube-node-lease   Active   58m
kube-public       Active   58m
kube-system       Active   58m

Video Example

You can refer to the video below for the whole terraform process:

You can refer to the GitHub repository for the complete example (https://github.com/alexlogy/terraform-eks-cluster).

Updates

If you are using the newer v19.x of the EKS module, there are some changes to take note of, described in the upgrade documentation (https://github.com/terraform-aws-modules/terraform-aws-eks/blob/master/docs/UPGRADE-19.0.md).

The most important change is the introduction of the node_security_group_enable_recommended_rules variable, which is enabled by default. As v18.x of the EKS module gives you a clean slate for security groups, we have to create the additional security group rules ourselves. With v19.x, we no longer have to do so.

If you keep additional rules from v18.x that duplicate the recommended ones, you might encounter errors like this:

╷
│ Error: [WARN] A duplicate Security Group rule was found on (sg-xxxxxx). This may be
│ a side effect of a now-fixed Terraform issue causing two security groups with
│ identical attributes but different source_security_group_ids to overwrite each
│ other in the state. See https://github.com/hashicorp/terraform/pull/2376 for more
│ information and instructions for recovery. Error: InvalidPermission.Duplicate: the specified rule "peer: 0.0.0.0/0, ALL, ALLOW" already exists
│ status code: 400, request id: xxxx-xxx-xxxx-xxxx-xxxxx
│
│ with module.eks.aws_security_group_rule.node["egress_cluster_jenkins"],
│ on .terraform/modules/eks/node_groups.tf line 207, in resource "aws_security_group_rule" "node":
│ 207: resource "aws_security_group_rule" "node" {
│
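
As a rough sketch of the two ways around this error when moving to v19.x, the fragment below shows the relevant arguments only and is not a complete configuration. Which rules count as recommended should be checked against the upgrade documentation. The sketch assumes that custom rules such as an allow-all egress rule, or the webhook rule on port 9443 used earlier in this article, are already covered by the recommended set.

```hcl
# Fragment of the module "eks" block after moving to v19.x (sketch only)
module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 19.0"

  # ... cluster and node group arguments as before ...

  # Option 1 (default behaviour): keep the recommended rules and drop custom
  # rules that duplicate them, leaving only rules specific to your workloads.
  node_security_group_enable_recommended_rules = true
  node_security_group_additional_rules         = {}

  # Option 2: keep your v18.x rules unchanged and turn the recommended set off.
  # node_security_group_enable_recommended_rules = false
}
```

The upgrade documentation also describes a change to the cluster_id output, with the cluster name exposed as module.eks.cluster_name in v19.x, so references such as the aws_eks_cluster data sources are worth reviewing at the same time.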