Before we go on, please note that this article describes my personal preferences for designing the architecture of my Kubernetes clusters.

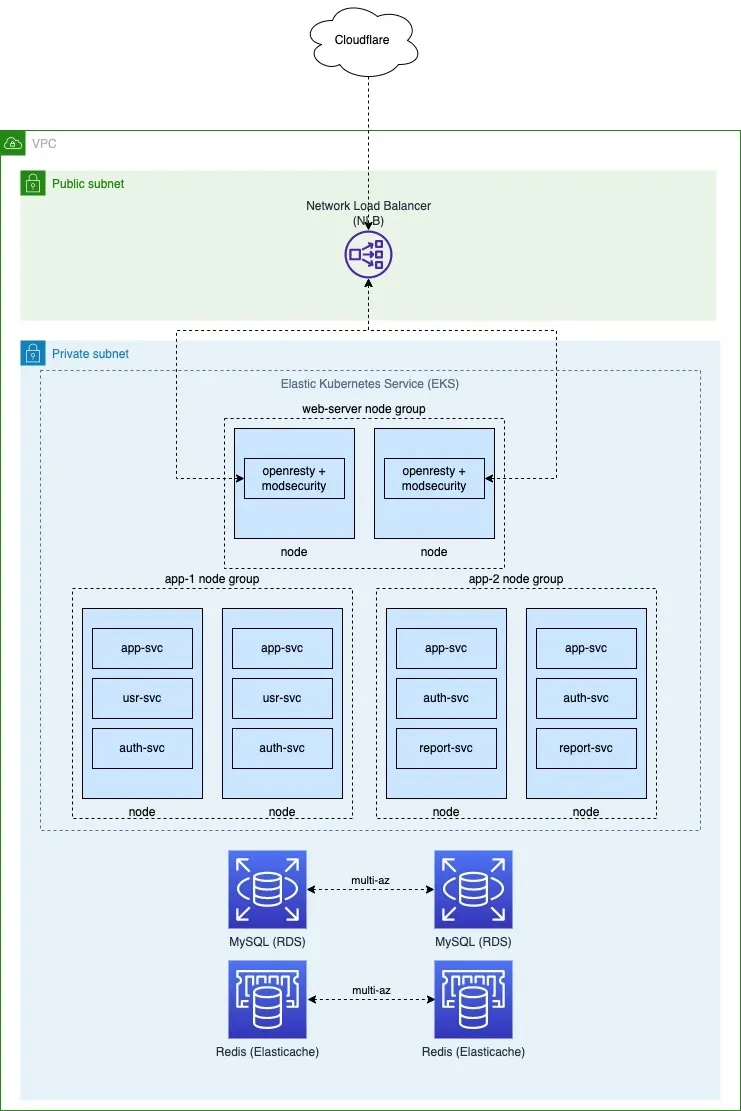

For simplicity's sake, the diagram above shows an overview of the architecture of a Kubernetes cluster.

Network

If you look carefully at the diagram above, you will notice that all the node groups, databases and Redis instances are located in private subnets. The only exception is the network load balancer (NLB), which is located in the public subnets.

By provisioning our node groups in the private subnets, we can ensure that outbound traffic reaches the internet via the NAT gateway, which has an Elastic IP assigned to it.

This makes it easy to whitelist the NAT gateway IP with third-party APIs, compared to nodes in public subnets, where each node has its own IP address.

Node Groups

The nodes are spread across the different availability zones (AZs) in the region for high availability (HA).

Each application, with its own set of services, should have its own node group so that each application has dedicated node resources. This allows our applications to scale quickly when the Horizontal Pod Autoscalers (HPAs) are triggered, because they do not have to wait for a shared node group to scale up to sufficient capacity in the event that other applications are scaling too.
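
To make the scaling argument a little more concrete, here is a minimal Python sketch of the replica calculation described in the Kubernetes HPA documentation (it ignores the HPA's tolerance and stabilization behaviour), plus a rough estimate of how many extra nodes a node group would need. The function names and the `pods_per_node` simplification are my own assumptions for illustration, not part of any Kubernetes API.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float) -> int:
    """Replica count the HPA aims for: ceil(current * currentMetric / targetMetric)."""
    return math.ceil(current_replicas * current_metric / target_metric)


def extra_nodes_needed(desired_pods: int, pods_per_node: int, current_nodes: int) -> int:
    """Rough estimate of nodes to add, assuming every node fits `pods_per_node` pods.

    `pods_per_node` is a hypothetical simplification; real scheduling depends on
    resource requests, taints, affinity rules and so on.
    """
    nodes_required = math.ceil(desired_pods / pods_per_node)
    return max(0, nodes_required - current_nodes)


if __name__ == "__main__":
    # 4 pods averaging 90% CPU against a 60% target.
    pods = desired_replicas(4, 90, 60)
    print(pods, extra_nodes_needed(pods, pods_per_node=2, current_nodes=2))
```

When a node group is dedicated to one application, the only pods competing for those extra nodes are its own, which is the point made above.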

I prefer to use spot instances for my node groups to reduce the cost of my cluster. As my node groups are provisioned in the private subnets, I do not have to worry about IP whitelisting when spot nodes are replaced, as my outgoing IP remains the same.

However, it's advisable to provision your default node group with on-demand instances, as your Kubernetes cluster may contain critical deployments that are not stateless. Please review your own cluster and make adjustments as necessary.

Web Server (Routing)

There are many options for a web server in Kubernetes, such as nginx-ingress, Envoy, service meshes like Istio, etc.

As my current and previous jobs have applications which use up to thousands of domains, I prefer to move this out to its own dedicated deployment for ease of managing the configurations. If you do not have so many domains to manage, you might wish to use easier options like the ones mentioned above.

I have a personal preference for OpenResty (https://openresty.org/en/) as my web server because it bundles LuaJIT, which allows me to build and customize my web routing with Lua. It has a package manager, OPM (https://opm.openresty.org/), which contains many third-party packages that can extend the functionality of the web server.

The inclusion of ModSecurity gives me a WAF to further protect my applications from attacks. My setup includes the OWASP ModSecurity Core Rule Set (https://owasp.org/www-project-modsecurity-core-rule-set/), which is a set of generic attack detection rules that protect against many common attack categories, including SQL Injection, Cross-Site Scripting, Local File Inclusion, etc.

If you look closely at the diagram above, OpenResty serves as a reverse proxy to the internal services via Kubernetes internal networking (xxx.svc.cluster.local).
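
As a rough illustration of the routing idea, the sketch below maps an incoming `Host` header to an in-cluster upstream using the standard `<service>.<namespace>.svc.cluster.local` naming. In my setup this kind of logic lives in OpenResty with Lua; Python is used here only as a neutral sketch. The domains, service names, namespaces and ports in `ROUTES` are hypothetical.

```python
from typing import Optional


def service_fqdn(service: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Build the in-cluster DNS name of a Kubernetes Service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


# Hypothetical routing table: public domain -> (service, namespace, port).
ROUTES = {
    "shop.example.com": ("storefront", "shop", 8080),
    "api.example.com": ("api", "backend", 3000),
}


def upstream_for(host: str) -> Optional[str]:
    """Return the upstream URL for a Host header, or None if the domain is unknown."""
    domain = host.split(":")[0].lower()  # drop any port and normalise case
    route = ROUTES.get(domain)
    if route is None:
        return None
    service, namespace, port = route
    return f"http://{service_fqdn(service, namespace)}:{port}"


if __name__ == "__main__":
    print(upstream_for("shop.example.com"))
```

With thousands of domains, a lookup table like this (or a database behind it) is much easier to manage than one ingress rule per domain.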

Load Balancer

OpenResty is then exposed through a Service backed by a network load balancer (NLB). As OpenResty is already a layer-7 proxy, there is no need for us to have another layer-7 (application) layer at the load balancer level. Thus, I chose an NLB to perform load balancing at layer 4 (TCP) of the OSI model, which provides very high performance compared to an ALB.
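
For illustration, here is a small Python sketch that builds such a Service manifest as a dictionary and prints it as JSON, which `kubectl apply -f` accepts. The names, labels and ports are hypothetical. The annotation shown is the one used by the legacy in-tree AWS cloud provider; if you run the AWS Load Balancer Controller, the expected annotations differ, so please check its documentation for your version.

```python
import json


def nlb_service_manifest(name: str, namespace: str, selector: dict,
                         port: int = 443, target_port: int = 8443) -> dict:
    """Build a Service of type LoadBalancer that requests an NLB on AWS."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "namespace": namespace,
            "annotations": {
                # Asks the legacy in-tree AWS provider for an NLB instead of a classic ELB.
                "service.beta.kubernetes.io/aws-load-balancer-type": "nlb",
            },
        },
        "spec": {
            "type": "LoadBalancer",
            "selector": selector,
            # Layer-4 forwarding only: TLS and HTTP routing are left to OpenResty.
            "ports": [
                {"name": "https", "protocol": "TCP", "port": port, "targetPort": target_port},
            ],
        },
    }


if __name__ == "__main__":
    manifest = nlb_service_manifest("openresty", "web", {"app": "openresty"})
    print(json.dumps(manifest, indent=2))
```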

To further increase the security of our infrastructure, we can whitelist the IPs of our CDN in the security group of the NLB.
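
A minimal sketch of what that whitelist could look like: the code below checks whether a source IP falls inside a list of CDN ranges, and builds ingress permissions in the shape expected by the EC2 `AuthorizeSecurityGroupIngress` API (the `IpPermissions` structure). The CIDRs are placeholder documentation ranges, not real CDN ranges; you would substitute the list published by your CDN. Only IPv4 is handled here, and the function names are my own.

```python
import ipaddress
from typing import Iterable, List

# Placeholder ranges (reserved for documentation), NOT real CDN ranges.
CDN_CIDRS = ["203.0.113.0/24", "198.51.100.0/24"]


def is_from_cdn(source_ip: str, cidrs: Iterable[str] = CDN_CIDRS) -> bool:
    """Return True if the source IP belongs to one of the allowed CDN ranges."""
    addr = ipaddress.ip_address(source_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in cidrs)


def https_ingress_permissions(cidrs: Iterable[str], port: int = 443) -> List[dict]:
    """Build one TCP ingress permission that allows only the given IPv4 ranges."""
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            "IpRanges": [
                {"CidrIp": str(ipaddress.ip_network(cidr)), "Description": "CDN edge range"}
                for cidr in cidrs
            ],
        }
    ]


if __name__ == "__main__":
    print(is_from_cdn("203.0.113.7"), is_from_cdn("192.0.2.1"))
    print(https_ingress_permissions(CDN_CIDRS))
```

With this in place, requests that try to bypass the CDN and hit the NLB directly are dropped at the security group.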

Cloudflare

For international traffic, Cloudflare is my preferred choice for CDN and DDoS mitigation. We will turn on the Cloudflare proxy for the DNS records that point to the NLB we have provisioned.

Final Thoughts

By designing my Kubernetes cluster with the above setup, I have a resilient architecture in place with plenty of room to scale.